Introduction

Azure Managed Disks became generally available (GA) in February 2017. Managed Disks greatly simplify working with Azure Virtual Machines (VMs) and Virtual Machine Scale Sets (VMSS). They largely eliminate the need to worry about Azure Storage accounts and the related VHD constraints and limits. When using managed disks for VMs or VMSS, you select the type of disk storage (SSD or HDD) and the size of disk needed, and the Azure platform takes care of the rest. Besides simplified management, managed disks bring several additional benefits, but I won’t reiterate those here, as there is already a lot of good information available.

Managed disks also simplify working with VM images. Prior to managed disks, an image had to be copied to the Storage account where the derived VM would be created. Doable, but not exactly convenient. With managed disks, the concept of using Storage accounts for disks and images goes away, so there is no need to copy the image. You can now create managed images as ARM resources, and you can easily create a VM by referencing a managed image, so long as the VM and image are in the same region and the same Azure subscription. The following two articles document this topic in detail:

- Create a VM from a generalized managed VM image

- Capture a managed image of a generalized VM in Azure

However, what if you need to use the managed image in another Azure subscription (to which you have access)? Or in another region? These capabilities are not yet available as part of the platform, but there are workarounds, using currently available capabilities, that meet these needs.

In this post, we’ll explore the following two common scenarios:

- Copy a managed image to another Azure subscription

- Copy a managed image to another region

High Level Steps

To make a managed image in one Azure subscription available for use in another Azure subscription, you currently need to follow a series of steps. I expect this process to be greatly simplified in the near future by enhancements to the Azure platform’s managed image functionality. Until then, the high-level steps are as follows:

1. Deploy a VM
2. Configure the VM
3. Generalize the VM (using Sysprep)
4. Create a managed image in the source subscription
5. Create a managed snapshot of the OS disk from the generalized VM
6. Copy the managed snapshot to the target Azure subscription
   - Alternative 1: different region, same subscription
   - Alternative 2: different region, different subscription
7. In the target subscription, create a managed image from the copied snapshot
8. Optional: from the new managed image in the target subscription, create a new temporary VM
9. Delete the snapshot in both the source and target Azure subscriptions
10. Delete the temporary VM created in step #8

Getting Started

For the purposes of this post, I’m going to assume you have already created a VM using managed disks, configured it to your liking (e.g. installing some software, making configuration changes, etc.), and generalized the VM.

Create an Image

Assuming you have a generalized (deallocated) VM, the next step is to create a managed image. It is worth pointing out that, at this time, creating the image is largely irrelevant when copying the image to another region and/or subscription. As you’ll soon see, the artifact that is copied is the snapshot of the source (generalized) VM’s disk(s). Copying the image itself is not yet supported, hence this blog post describing a workaround.

If you already have the image, you can skip this step. The steps are as follows:

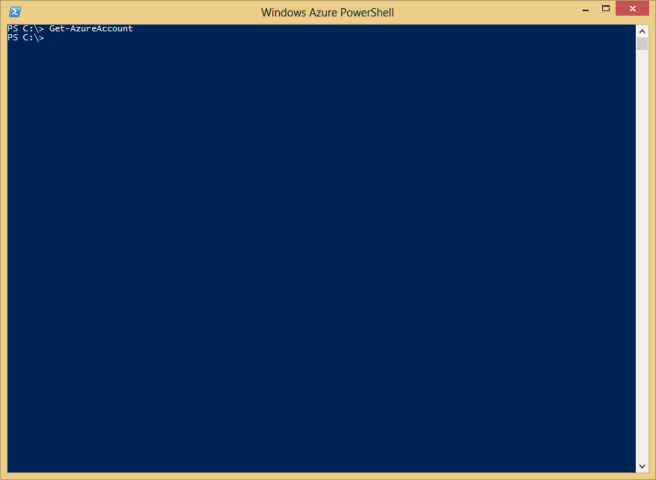

PowerShell

<# -- Create a Managed Disk Image if necessary -- #>
$vm = Get-AzureRmVM -ResourceGroupName $resourceGroupName -Name $vmName
$image = New-AzureRmImageConfig -Location $region -SourceVirtualMachineId $vm.Id
New-AzureRmImage -Image $image -ImageName $imageName -ResourceGroupName $resourceGroupName

Azure CLI 2.0

# ------ Create an image ------
# Get the ID for the VM.
vmid=$(az vm show -g "$ResourceGroupName" -n "$vmName" --query "id" -o tsv)
# Create the image.
az image create -g "$ResourceGroupName" \
  --name "$imageName" \
  --location "$location" \
  --os-type Windows \
  --source "$vmid"

Create a snapshot

Now that you have an image, the next step is to create a snapshot of the OS disk of the source VM. If your image needs data disks, you’ll want to create a snapshot of the data disks as well (not shown below).

PowerShell

<# -- Create a snapshot of the OS disk from the generalized VM (data disks not shown) -- #>
$vm = Get-AzureRmVM -ResourceGroupName $resourceGroupName -Name $vmName
$disk = Get-AzureRmDisk -ResourceGroupName $resourceGroupName -DiskName $vm.StorageProfile.OsDisk.Name
$snapshot = New-AzureRmSnapshotConfig -SourceUri $disk.Id -CreateOption Copy -Location $region
$snapshotName = $imageName + "-" + $region + "-snap"
New-AzureRmSnapshot -ResourceGroupName $resourceGroupName -Snapshot $snapshot -SnapshotName $snapshotName

Azure CLI 2.0

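# Get the name of the OS disk, then create a snapshot of it.
diskName=$(az vm show -g "$ResourceGroupName" -n "$vmName" --query "storageProfile.osDisk.name" -o tsv)
snapshotName="$imageName-$location-snap"
az snapshot create -g "$ResourceGroupName" -n "$snapshotName" --location "$location" --source "$diskName"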
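The section above notes that data disks need snapshots as well, but doesn't show how. A minimal bash sketch of that step, assuming the data disks live in the same resource group as the VM (so `--source` can take the disk name, as in the OS-disk example). The function name `snapshot_data_disks` and the `<prefix>-<diskName>-snap` naming scheme are hypothetical, chosen only for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a snapshot of every data disk attached to a VM.
# Arguments: resource group, VM name, snapshot-name prefix, location.
# Assumes the data disks are in the same resource group as the VM.
snapshot_data_disks() {
  local rg=$1 vm=$2 prefix=$3 location=$4
  local disk
  # Read the data disk names (one per line) from the VM's storage profile.
  # Process substitution keeps the loop in the current shell.
  while IFS= read -r disk; do
    if [[ -n "$disk" ]]; then
      az snapshot create -g "$rg" -n "${prefix}-${disk}-snap" \
        --location "$location" --source "$disk" || return 1
    fi
  done < <(az vm show -g "$rg" -n "$vm" --query "storageProfile.dataDisks[].name" -o tsv)
}

# Example, assuming $vmName holds the VM's name:
# snapshot_data_disks "$ResourceGroupName" "$vmName" "$imageName-$location" "$location"
```

The same copy steps shown for the OS disk snapshot would then apply to each data disk snapshot.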

Copy the snapshot

The next step is to copy the snapshot to the target Azure subscription. In the following example, the first thing to do is grab the snapshot’s resource ID. That ID is used to specify the source snapshot when creating the new snapshot.

PowerShell

<#-- Copy the snapshot to another subscription, same region --#>
$snap = Get-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName

<#-- Change to the target subscription --#>
Select-AzureRmSubscription -SubscriptionId $targetSubscriptionId

$snapshotConfig = New-AzureRmSnapshotConfig -OsType Windows `
    -Location $region `
    -CreateOption Copy `
    -SourceResourceId $snap.Id

$snap = New-AzureRmSnapshot -ResourceGroupName $resourceGroupName `
    -SnapshotName $snapshotName `
    -Snapshot $snapshotConfig

Azure CLI 2.0

# ------ Copy the snapshot to another Azure subscription ------
# Set the source subscription (to be sure)
az account set --subscription "$SubscriptionID"
snapshotId=$(az snapshot show -g "$ResourceGroupName" -n "$snapshotName" --query "id" -o tsv)
# Change to the target subscription
az account set --subscription "$TargetSubscriptionID"
az snapshot create -g "$ResourceGroupName" -n "$snapshotName" --source "$snapshotId"
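The CLI example looks up the snapshot's ID with `az snapshot show` while the source subscription is still selected. After switching subscriptions, a bare snapshot name would be looked up in the target subscription, so the full ID is what lets `--source` point back at the source. That ID follows the standard ARM resource ID layout, so it can also be assembled directly. A small sketch; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the ARM resource ID of a managed snapshot.
# It has the same shape as the value `az snapshot show ... --query "id" -o tsv` prints.
snapshot_resource_id() {
  local subscription=$1 resource_group=$2 snapshot=$3
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Compute/snapshots/%s\n' \
    "$subscription" "$resource_group" "$snapshot"
}

# Example, reusing the variables from the CLI script above:
# az account set --subscription "$TargetSubscriptionID"
# az snapshot create -g "$ResourceGroupName" -n "$snapshotName" \
#   --source "$(snapshot_resource_id "$SubscriptionID" "$ResourceGroupName" "$snapshotName")"
```

Looking the ID up with `az snapshot show`, as the original script does, has the advantage of failing early if the snapshot doesn't exist.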

Alternative: Copy the snapshot to a different region for the same subscription

The previous examples showed how to copy the snapshot to a different subscription, with the restriction that the source and target regions must be the same. At times, you may need to get the snapshot into another region. The following example shows how to copy the snapshot to another region within the same Azure subscription. The big difference here is the need to get at the blob that underlies the snapshot, which can be accomplished by getting a Shared Access Signature (SAS) for the snapshot.

PowerShell

# Create the name of the snapshot, using the current region in the name.
$snapshotName = $imageName + "-" + $region + "-snap"

# Get the source snapshot
$snap = Get-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName

# Create a Shared Access Signature (SAS) for the source snapshot
$snapSasUrl = Grant-AzureRmSnapshotAccess -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName -DurationInSecond 3600 -Access Read

# Set up the target storage account in the other region.
# The container can stay private: the copy authenticates with the storage context.
$targetStorageContext = (Get-AzureRmStorageAccount -ResourceGroupName $resourceGroupName -Name $storageAccountName).Context
New-AzureStorageContainer -Name $imageContainerName -Context $targetStorageContext -Permission Off

# Use the SAS URL to copy the blob to the target storage account (and thus region)
Start-AzureStorageBlobCopy -AbsoluteUri $snapSasUrl.AccessSAS -DestContainer $imageContainerName -DestContext $targetStorageContext -DestBlob $imageBlobName
Get-AzureStorageBlobCopyState -Container $imageContainerName -Blob $imageBlobName -Context $targetStorageContext -WaitForComplete

# Get the full URI to the blob
$osDiskVhdUri = ($targetStorageContext.BlobEndPoint + $imageContainerName + "/" + $imageBlobName)

# Build up the snapshot configuration, using the target storage account's resource ID
$snapshotConfig = New-AzureRmSnapshotConfig -AccountType StandardLRS `
    -OsType Windows `
    -Location $targetRegionName `
    -CreateOption Import `
    -SourceUri $osDiskVhdUri `
    -StorageAccountId "/subscriptions/${sourceSubscriptionId}/resourceGroups/${resourceGroupName}/providers/Microsoft.Storage/storageAccounts/${storageAccountName}"

# Create the new snapshot in the target region
$snapshotName = $imageName + "-" + $targetRegionName + "-snap"
$snap2 = New-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName -Snapshot $snapshotConfig

Azure CLI 2.0

az account set --subscription "$SubscriptionID"
snapshotId=$(az snapshot show -g "$ResourceGroupName" -n "$snapshotName" --query "id" -o tsv)

# Get the SAS for the snapshot
snapshotSasUrl=$(az snapshot grant-access -g "$ResourceGroupName" -n "$snapshotName" --duration-in-seconds 3600 -o tsv)

# Set up the target storage account in another region
targetStorageAccountKey=$(az storage account keys list -g "$ResourceGroupName" --account-name "$targetStorageAccountName" --query "[:1].value" -o tsv)
# Account SAS valid for one day (GNU date syntax)
sasExpiry=$(date -u -d "+1 day" '+%Y-%m-%dT%H:%MZ')
storageSasToken=$(az storage account generate-sas --expiry "$sasExpiry" --permissions aclrpuw --resource-types sco --services b --https-only --account-name "$targetStorageAccountName" --account-key "$targetStorageAccountKey" -o tsv)
az storage container create -n "$imageStorageContainerName" --account-name "$targetStorageAccountName" --sas-token "$storageSasToken"

# Copy the snapshot to the target region using the SAS URL
imageBlobName="$imageName-osdisk.vhd"
copyId=$(az storage blob copy start --source-uri "$snapshotSasUrl" --destination-blob "$imageBlobName" --destination-container "$imageStorageContainerName" --sas-token "$storageSasToken" --account-name "$targetStorageAccountName")

# Check whether the copy is complete
# TODO: Put this in a loop until status is 'success'
az storage blob show --container-name "$imageStorageContainerName" -n "$imageBlobName" --account-name "$targetStorageAccountName" --sas-token "$storageSasToken" --query "properties.copy.status" -o tsv

# Get the URI to the blob
blobEndpoint=$(az storage account show -g "$ResourceGroupName" -n "$targetStorageAccountName" --query "primaryEndpoints.blob" -o tsv)
osDiskVhdUri="$blobEndpoint$imageStorageContainerName/$imageBlobName"

# Create the snapshot in the target region
snapshotName="$imageName-$targetLocation-snap"
az snapshot create -g "$ResourceGroupName" -n "$snapshotName" -l "$targetLocation" --source "$osDiskVhdUri"
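The CLI example leaves the wait for the blob copy as a TODO. One way to fill it in is a polling loop. This is a sketch, not part of the original scripts: the function name and its attempts/delay arguments are hypothetical, and it assumes the status command prints the raw value (hence `-o tsv`), which Azure Storage reports as `pending`, `success`, `aborted` or `failed`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll an Azure Storage blob copy until it finishes.
# Usage: wait_for_blob_copy <attempts> <delay-seconds> <command...>
# The command must print the raw copy status (pending, success, aborted or failed).
# Returns 0 on success, 1 if the copy failed or was aborted, 2 on timeout.
wait_for_blob_copy() {
  local attempts=$1 delay=$2
  shift 2
  local status="" i
  for ((i = 1; i <= attempts; i++)); do
    # Treat a failed status query as "not finished yet" and try again.
    status=$("$@") || status="unknown"
    case "$status" in
      success)
        echo "Blob copy succeeded."
        return 0 ;;
      failed|aborted)
        echo "Blob copy ended with status '$status'." >&2
        return 1 ;;
    esac
    sleep "$delay"
  done
  echo "Blob copy still '$status' after $attempts attempts." >&2
  return 2
}

# Example, reusing the variables from the CLI script above:
# wait_for_blob_copy 120 30 az storage blob show \
#   --container-name "$imageStorageContainerName" -n "$imageBlobName" \
#   --account-name "$targetStorageAccountName" --sas-token "$storageSasToken" \
#   --query "properties.copy.status" -o tsv
```

Passing the status command as arguments keeps the loop independent of how the status is fetched, and the distinct return codes let a script tell a failed copy from one that is simply slow.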

Alternative: Copy the snapshot to a different region for a different subscription

The previous example showed how to copy the snapshot to a different region, yet associated with the same subscription. In the following example, we’ll tweak the example script a bit to show copying the snapshot to a different region and a different subscription.

These three examples should cover the scenarios needed to get the snapshot wherever it needs to be. From there, the steps to create the image should be the same, since they all start with the snapshot.

PowerShell

# Create the name of the snapshot, using the current region in the name.
$snapshotName = $imageName + "-" + $region + "-snap"

# Get the source snapshot
$snap = Get-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName

# Create a Shared Access Signature (SAS) for the source snapshot
$snapSasUrl = Grant-AzureRmSnapshotAccess -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName -DurationInSecond 3600 -Access Read

# Set up the target storage account in the other region and subscription.
# The container can stay private: the copy authenticates with the storage context.
Select-AzureRmSubscription -SubscriptionId $targetSubscriptionId
$targetStorageContext = (Get-AzureRmStorageAccount -ResourceGroupName $targetResourceGroupName -Name $targetStorageAccountName).Context
New-AzureStorageContainer -Name $imageContainerName -Context $targetStorageContext -Permission Off

# Use the SAS URL to copy the blob to the target storage account (and thus region)
Start-AzureStorageBlobCopy -AbsoluteUri $snapSasUrl.AccessSAS -DestContainer $imageContainerName -DestContext $targetStorageContext -DestBlob $imageBlobName
Get-AzureStorageBlobCopyState -Container $imageContainerName -Blob $imageBlobName -Context $targetStorageContext -WaitForComplete

# Get the full URI to the blob
$osDiskVhdUri = ($targetStorageContext.BlobEndPoint + $imageContainerName + "/" + $imageBlobName)

# Build up the snapshot configuration, using the target storage account's resource ID
$snapshotConfig = New-AzureRmSnapshotConfig -AccountType StandardLRS `
    -OsType Windows `
    -Location $targetRegionName `
    -CreateOption Import `
    -SourceUri $osDiskVhdUri `
    -StorageAccountId "/subscriptions/${targetSubscriptionId}/resourceGroups/${targetResourceGroupName}/providers/Microsoft.Storage/storageAccounts/${targetStorageAccountName}"

# Create the new snapshot in the target region
$snapshotName = $imageName + "-" + $targetRegionName + "-snap"
$snap2 = New-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName -Snapshot $snapshotConfig

Azure CLI 2.0

az account set --subscription "$SubscriptionID"
snapshotId=$(az snapshot show -g "$ResourceGroupName" -n "$snapshotName" --query "id" -o tsv)

# Get the SAS for the snapshot
snapshotSasUrl=$(az snapshot grant-access -g "$ResourceGroupName" -n "$snapshotName" --duration-in-seconds 3600 -o tsv)

# Switch to the DIFFERENT subscription
az account set --subscription "$TargetSubscriptionID"

# Set up the target storage account in another region
targetStorageAccountKey=$(az storage account keys list -g "$ResourceGroupName" --account-name "$targetStorageAccountName" --query "[:1].value" -o tsv)
# Account SAS valid for one day (GNU date syntax)
sasExpiry=$(date -u -d "+1 day" '+%Y-%m-%dT%H:%MZ')
storageSasToken=$(az storage account generate-sas --expiry "$sasExpiry" --permissions aclrpuw --resource-types sco --services b --https-only --account-name "$targetStorageAccountName" --account-key "$targetStorageAccountKey" -o tsv)
az storage container create -n "$imageStorageContainerName" --account-name "$targetStorageAccountName" --sas-token "$storageSasToken"

# Copy the snapshot to the target region using the SAS URL
imageBlobName="$imageName-osdisk.vhd"
copyId=$(az storage blob copy start --source-uri "$snapshotSasUrl" --destination-blob "$imageBlobName" --destination-container "$imageStorageContainerName" --sas-token "$storageSasToken" --account-name "$targetStorageAccountName")

# Check whether the copy is complete
# TODO: Put this in a loop until status is 'success'
az storage blob show --container-name "$imageStorageContainerName" -n "$imageBlobName" --account-name "$targetStorageAccountName" --sas-token "$storageSasToken" --query "properties.copy.status" -o tsv

# Get the URI to the blob
blobEndpoint=$(az storage account show -g "$ResourceGroupName" -n "$targetStorageAccountName" --query "primaryEndpoints.blob" -o tsv)
osDiskVhdUri="$blobEndpoint$imageStorageContainerName/$imageBlobName"

# Create the snapshot in the target region
snapshotName="$imageName-$targetLocation-snap"
az snapshot create -g "$ResourceGroupName" -n "$snapshotName" -l "$targetLocation" --source "$osDiskVhdUri"
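The three scenarios above boil down to a small decision: the region determines whether the SAS and blob-copy route is needed, and the subscription only matters when the region stays the same. A sketch of that decision as a bash function; the function name and its output labels are hypothetical, and it assumes both regions are given in the same short form (e.g. `eastus`), so a plain string comparison is enough.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which copy approach from this post applies.
# Arguments: source subscription, source region, target subscription, target region.
# Assumes both regions use the same short form (e.g. eastus).
choose_snapshot_copy_method() {
  local src_sub=$1 src_region=$2 dst_sub=$3 dst_region=$4
  if [[ "$src_region" != "$dst_region" ]]; then
    # Different region (same or different subscription): grant SAS access,
    # copy the blob to a storage account in the target region, then import it.
    echo "sas-blob-copy"
  elif [[ "$src_sub" != "$dst_sub" ]]; then
    # Same region, different subscription: create a snapshot from the source ID.
    echo "snapshot-copy"
  else
    # Same region and subscription: the managed image can be used directly.
    echo "none"
  fi
}

# Example: choose_snapshot_copy_method "$SubscriptionID" "$location" "$TargetSubscriptionID" "$targetLocation"
```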

Create an Image (in target subscription)

Once the snapshot has been copied to the target Azure subscription, the next step is to use the snapshot as the basis for a new managed image. Be sure to proceed to the next step (create a temporary VM from the image); don’t stop here!

PowerShell

<# -- In the second subscription, create a new Image from the copied snapshot --#>
Select-AzureRmSubscription -SubscriptionId $targetSubscriptionId

$snap = Get-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName

$imageConfig = New-AzureRmImageConfig -Location $destinationRegion
$imageConfig = Set-AzureRmImageOsDisk -Image $imageConfig `
    -OsType Windows `
    -OsState Generalized `
    -SnapshotId $snap.Id

New-AzureRmImage -ResourceGroupName $resourceGroupName `
    -ImageName $imageName `
    -Image $imageConfig

Azure CLI 2.0

az account set --subscription "$TargetSubscriptionID"
snapshotId=$(az snapshot show -g "$ResourceGroupName" -n "$snapshotName" --query "id" -o tsv)
az image create -g "$ResourceGroupName" -n "$imageName" -l "$location" --os-type Windows --source "$snapshotId"

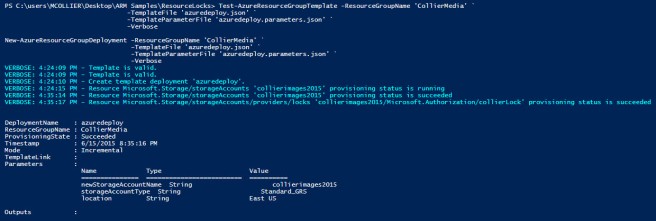

Optional: create a temporary VM from the Image (in target subscription)

Earlier, when you created the snapshot and copied it to the target Azure subscription, you may have noticed the process went relatively quickly. One reason for this is how Azure copies the data: it uses a copy-on-read process, meaning the full dataset isn’t copied until it is needed. To trigger the data to be fully copied, a VM can be created. The VM created in this step is used to trigger the data transfer, after which we can safely delete the VM and snapshots. This step can be considered optional, because the first time a VM is created from the image, that will ensure the data is fully copied anyway.

In the example below, I’m using an ARM template to provision the new (temporary) VM. This template is very similar to this one, except that I’ve modified it to allow for the use of a managed image.

PowerShell

<# -- In the second subscription, create a new VM from the new Image. -- #>
$currentDate = Get-Date -Format yyyyMMdd.HHmmss
$deploymentLabel = "vmimage-$currentDate"
$image = Get-AzureRmImage -ResourceGroupName $resourceGroupName -ImageName $imageName

<# -- Get a random series of letters to help make a somewhat unique DNS prefix. -- #>
$dnsPrefix = "myvm-" + -join ((97..122) | Get-Random -Count 7 | ForEach-Object {[char]$_})

$creds = Get-Credential -Message "Enter username and password for new VM."

$templateParams = @{
    vmName = $vmName;
    adminUserName = $creds.UserName;
    adminPassword = $creds.Password;
    dnsLabelPrefix = $dnsPrefix;
    managedImageResourceId = $image.Id
}

# Put the dummy VM in a separate resource group, as it makes it super easy to clean up all the extra stuff that goes with a VM (NIC, IP, VNet, etc.)
$rgNameTemp = $resourceGroupName + "-temp"
New-AzureRmResourceGroup -Location $region `
    -Name $rgNameTemp

New-AzureRmResourceGroupDeployment -Name $deploymentLabel `
    -ResourceGroupName $rgNameTemp `
    -TemplateParameterObject $templateParams `
    -TemplateUri 'https://raw.githubusercontent.com/mcollier/copy-azure-managed-disk-images/master/azuredeploy.json' `
    -Verbose

Azure CLI 2.0

az group create -l "$location" -n "$resourceGroupTempName"

imageId=$(az image show -g "$ResourceGroupName" -n "$imageName" --query "id" -o tsv)

az group deployment create -g "$resourceGroupTempName" --template-uri https://raw.githubusercontent.com/mcollier/copy-azure-managed-disk-images/master/azuredeploy.json --parameters "{\"vmName\":{\"value\": \"$vmName\"}, \"adminUsername\":{\"value\": \"$user\"}, \"adminPassword\":{\"value\": \"$pwd\"}, \"dnsLabelPrefix\":{\"value\": \"$dnsPrefix\"}, \"managedImageResourceId\":{\"value\": \"$imageId\"}}"
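The CLI example passes `$dnsPrefix` (along with `$vmName`, `$user` and `$pwd`) without defining it. A bash counterpart to the PowerShell line that builds the random prefix, using bash's built-in `$RANDOM`. Unlike `Get-Random -Count 7`, which picks seven distinct letters, this version may repeat letters; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "myvm-" followed by 7 random lowercase letters,
# similar to the PowerShell example (letters may repeat here).
random_dns_prefix() {
  local letters=abcdefghijklmnopqrstuvwxyz suffix="" i
  for ((i = 0; i < 7; i++)); do
    # Pick one letter at a random offset between 0 and 25.
    suffix+=${letters:RANDOM%26:1}
  done
  printf 'myvm-%s\n' "$suffix"
}

# Example: dnsPrefix=$(random_dns_prefix)
```

`$RANDOM` is not a cryptographic source, which is fine here since the prefix only needs to avoid DNS name collisions.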

Delete the snapshots

Since the new image and temporary VM have been created in the target subscription, the snapshot is no longer needed. Delete it in both the source and target Azure subscriptions.

PowerShell

<# -- Delete the snapshot in the second subscription -- #>
Remove-AzureRmSnapshot -ResourceGroupName $resourceGroupName -SnapshotName $snapshotName -Force

Azure CLI 2.0

az snapshot delete -g $resourceGroupName -n $snapshotName
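The snippets above delete the snapshot only in the currently selected subscription. A sketch that visits each subscription in turn, assuming (as the CLI examples do) that the snapshot has the same resource group name and snapshot name in each. That holds for the same-region copy; the cross-region copies use a region-specific snapshot name, so in that case call it once per subscription with the matching name. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete a snapshot with the same resource group and name
# in each of the given subscriptions.
# Usage: delete_snapshot_in_subscriptions <resource-group> <snapshot-name> <subscription>...
delete_snapshot_in_subscriptions() {
  local rg=$1 name=$2
  shift 2
  local sub
  for sub in "$@"; do
    # Select the subscription first, then delete the snapshot there.
    az account set --subscription "$sub" || return 1
    az snapshot delete -g "$rg" -n "$name" || return 1
  done
}

# Example (same-region copy):
# delete_snapshot_in_subscriptions "$ResourceGroupName" "$snapshotName" \
#   "$SubscriptionID" "$TargetSubscriptionID"
```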

Delete the temporary VM

Earlier there was a step to create a temporary VM. The VM was created to trigger the data copy process and serves no other purpose at this point, so it is safe to delete. If you followed the earlier steps, the temporary VM was created in its own resource group, so simply delete that resource group.

PowerShell

Remove-AzureRmResourceGroup -Name $rgNameTemp -Force

Azure CLI 2.0

az group delete -n "$resourceGroupTempName" --yes

Summary

As you can see, there are several steps necessary to copy a Managed Disk Image from one Azure subscription to another. The steps aren’t difficult, just a bit unintuitive at this point. This post should help make the process a bit easier to understand. When the ability to copy images across subscriptions and regions is available as a first-class feature in Azure (hopefully later this year), this post will be effectively obsolete. I’m OK with that. 🙂

Resources / More Information

- Azure Managed Disks

- “Azure Managed Disks Deep Dive, Lessons Learned and Benefits”, Igor Pagliai

- Manage VM images

- Full source code at https://github.com/mcollier/copy-azure-managed-disk-images.

I would like to thank Chetan Agarwal and Neil Mackenzie for their assistance in reviewing this post.